Securing Inter-Agent Communication in Syncloop AI Environments

Posted by: Daniel Taylor | October 21, 2025

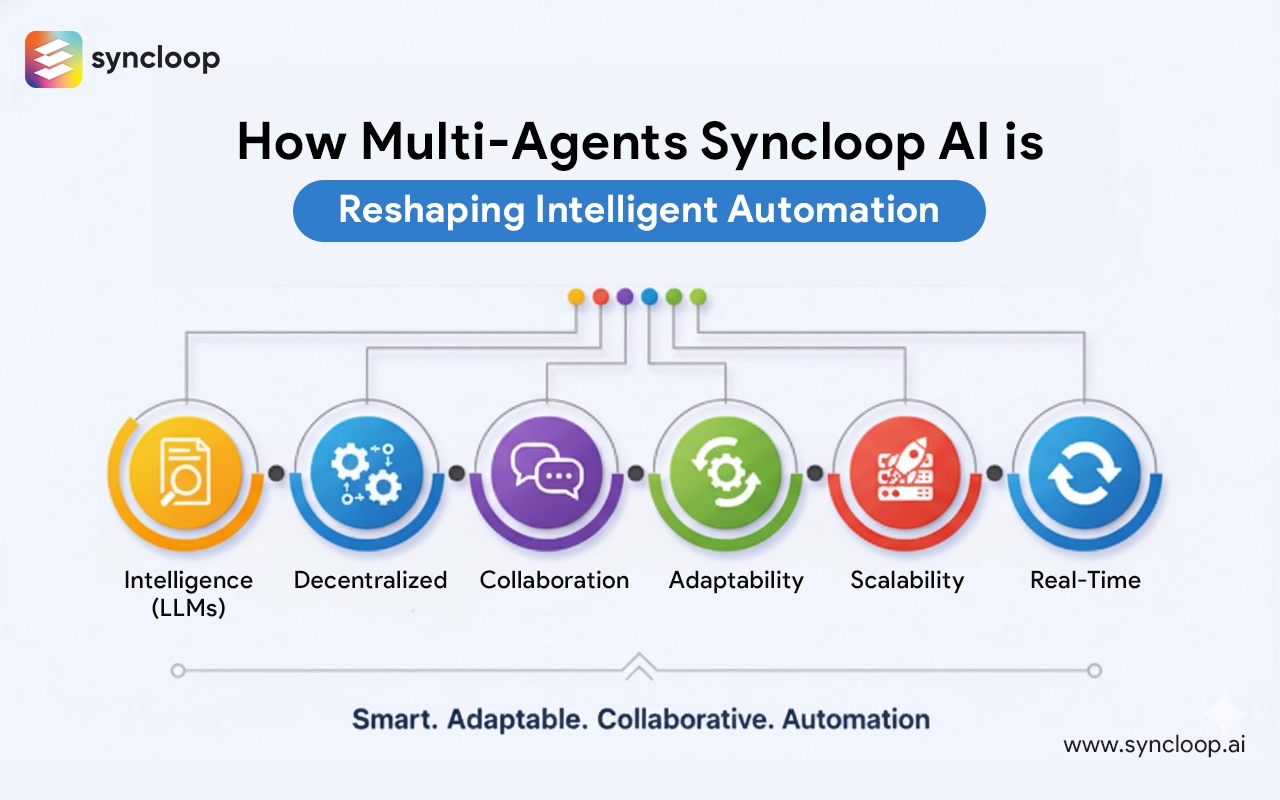

As organizations embrace multi-agent architectures to automate complex workflows, the conversation naturally shifts from capability to trust. Agents coordinate, reason, and act autonomously, but when those agents exchange data and instructions across networks and knowledge bases, the security stakes rise sharply. A misconfigured API, an exposed credential, or an unverified data source can turn intelligent automation into a liability.

Syncloop AI is built to enable powerful, API-driven agent collaboration. Securing that collaboration is not an afterthought but a design imperative. This article explores why inter-agent security matters, the core principles that should govern agent-to-agent interactions, and practical approaches to harden Syncloop AI environments so teams can safely scale intelligence across the enterprise. The goal is simple: enable confident automation that respects data integrity, privacy, and business intent — while remaining flexible enough to evolve.

Why securing inter-agent communication matters

Multi-agent systems derive their value from connectivity. Agents depend on one another for context, verification, and action. That same connectivity, however, expands the attack surface in ways traditional applications don’t face:

- A compromised agent can exfiltrate sensitive data or issue unauthorized actions at scale.

- Unauthenticated or unaudited API calls between agents can create subtle chains of misbehavior that are hard to detect.

- Inaccurate or malicious inputs to a knowledge retriever can lead to confidently wrong outputs (in effect, a supply-chain attack on the data an LLM reasons over).

- Regulatory and contractual obligations often demand traceability and proof of consent for data access and processing.

For organizations that rely on automation for customer service, finance, healthcare, or legal workflows, the cost of not implementing rigorous inter-agent security is operational, reputational, and sometimes legal. Securing these channels preserves trust — between systems, and between people and the automation that augments their decisions.

Core principles for secure inter-agent communication

Security in multi-agent environments should be guided by a set of clear, pragmatic principles that inform every design and operational choice:

- Least privilege: Give each agent the minimum permissions necessary to perform its role. Avoid broad, shared credentials.

- Zero trust by default: Do not implicitly trust any agent, network segment, or service. Authenticate and authorize every interaction.

- Defense in depth: Combine network protections, cryptographic guarantees, runtime isolation, and governance controls to create layered protection.

- Provable provenance: Maintain tamper-evident traces showing which agent accessed which data and why — for auditability and root cause analysis.

- Separation of duties: Split responsibilities (retrieval, reasoning, verification, execution) across agents to reduce the blast radius of any single compromise.

- Human oversight where required: Preserve points where humans can intervene, review, or override decisions for high-risk actions.

- Continuous validation: Treat security as dynamic — monitor, test, and update controls as agents and data sources evolve.
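The "provable provenance" principle can be made concrete with a hash chain. The sketch below is a minimal, in-memory illustration in Python that assumes a record shape of our own choosing (`agent_id`, `action`, `resource`, `reason`); `AuditLog` is a hypothetical class, not a Syncloop AI API. Each entry commits to the hash of the entry before it, so editing or reordering history breaks verification.

```python
import hashlib
import json
import time
from dataclasses import dataclass, field
from typing import List

GENESIS_HASH = "0" * 64


def _digest(body: dict) -> str:
    # Canonical JSON (sorted keys) so the hash is reproducible at verification time.
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode("utf-8")).hexdigest()


@dataclass
class AuditLog:
    """Hypothetical append-only log; each entry commits to the previous entry's hash."""
    entries: List[dict] = field(default_factory=list)

    def append(self, agent_id: str, action: str, resource: str, reason: str) -> dict:
        prev_hash = self.entries[-1]["hash"] if self.entries else GENESIS_HASH
        body = {
            "agent_id": agent_id,
            "action": action,
            "resource": resource,
            "reason": reason,  # the "why" behind the access
            "ts": time.time(),
            "prev_hash": prev_hash,
        }
        entry = {**body, "hash": _digest(body)}
        self.entries.append(entry)
        return entry

    def verify(self) -> bool:
        """Recompute the chain; an edited or reordered entry makes this return False."""
        prev_hash = GENESIS_HASH
        for entry in self.entries:
            body = {k: v for k, v in entry.items() if k != "hash"}
            if body["prev_hash"] != prev_hash or _digest(body) != entry["hash"]:
                return False
            prev_hash = entry["hash"]
        return True
```

Because nothing after the last entry vouches for it, a production system would periodically anchor the latest hash in separate, write-once storage so that truncation of the tail is also detectable.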

These principles are practical guardrails that map directly onto capabilities within Syncloop AI and typical enterprise infrastructure.

Implementing security in Syncloop AI

Syncloop AI’s API-first architecture makes it possible to bake robust security practices into agent interactions from the outset. Below are key controls and architectural patterns to apply.

Authentication and strong identity

Use strong, short-lived credentials for agent identities rather than long-lived static keys. Employ mutual TLS (mTLS) where feasible so both caller and callee verify each other’s identity at the transport layer.
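As an illustration of mTLS between agents, the following Python sketch uses only the standard `ssl` module. The function names `make_mtls_context` and `peer_is_authorized`, and the DNS-name allowlist approach, are assumptions for this example rather than Syncloop AI features; certificate paths would come from your own PKI.

```python
import ssl
from typing import Iterable, Optional


def make_mtls_context(role: str, certfile: Optional[str] = None,
                      keyfile: Optional[str] = None,
                      cafile: Optional[str] = None) -> ssl.SSLContext:
    """Build a TLS context in which both sides must present a certificate.

    role is "server" (the agent receiving calls) or "client" (the calling agent).
    """
    if role == "server":
        ctx = ssl.SSLContext(ssl.PROTOCOL_TLS_SERVER)
        # Servers do not request client certificates by default; require one.
        ctx.verify_mode = ssl.CERT_REQUIRED
    elif role == "client":
        # Client contexts already require and verify the server cert and hostname.
        ctx = ssl.SSLContext(ssl.PROTOCOL_TLS_CLIENT)
    else:
        raise ValueError(f"unknown role: {role!r}")
    ctx.minimum_version = ssl.TLSVersion.TLSv1_2
    if cafile:
        # Trust only the private CA that issues agent certificates.
        ctx.load_verify_locations(cafile=cafile)
    if certfile:
        # Present this agent's own (ideally short-lived) certificate.
        ctx.load_cert_chain(certfile, keyfile)
    return ctx


def peer_is_authorized(peercert: dict, allowed_dns_names: Iterable[str]) -> bool:
    """Check the verified peer certificate's DNS SANs against an allowlist."""
    allowed = set(allowed_dns_names)
    return any(kind == "DNS" and value in allowed
               for kind, value in peercert.get("subjectAltName", ()))
```

On the server side, the context would wrap an accepted socket with `ctx.wrap_socket(sock, server_side=True)`, after which `getpeercert()` returns the verified client certificate to pass to `peer_is_authorized`. Names such as `retriever-01.agents.internal` are hypothetical.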

Integrate with corporate identity providers (OIDC, SAML) and automated identity lifecycle management so that agent identities are provisioned and revoked as services are deployed or retired and as developers join or leave teams.

Issue scoped tokens tied to an agent’s role and environment (dev, staging, prod) to reduce accidental cross-environment access.
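To show what "scoped, short-lived, environment-bound" means in practice, here is a minimal HMAC-signed token sketch using only the Python standard library. The token format, claim names, and `SIGNING_KEY` are hypothetical; a real deployment would normally use access tokens issued by its identity provider (for example, via an OAuth 2.0 client-credentials flow) rather than a hand-rolled format.

```python
import base64
import hashlib
import hmac
import json
import time
from typing import Iterable, Optional

# Hypothetical signing key; in practice, fetch it from a secrets manager or KMS.
SIGNING_KEY = b"demo-only-signing-key"


def _b64e(data: bytes) -> str:
    return base64.urlsafe_b64encode(data).rstrip(b"=").decode("ascii")


def _b64d(text: str) -> bytes:
    return base64.urlsafe_b64decode(text + "=" * (-len(text) % 4))


def _sign(payload: str, key: bytes) -> str:
    return _b64e(hmac.new(key, payload.encode("utf-8"), hashlib.sha256).digest())


def issue_token(agent_id: str, role: str, env: str, scopes: Iterable[str],
                ttl_s: int = 300, key: bytes = SIGNING_KEY,
                now: Optional[float] = None) -> str:
    """Mint a short-lived token bound to one agent, role, environment, and scope set."""
    now = time.time() if now is None else now
    claims = {"sub": agent_id, "role": role, "env": env,
              "scopes": sorted(scopes), "exp": now + ttl_s}
    payload = _b64e(json.dumps(claims, sort_keys=True).encode("utf-8"))
    return f"{payload}.{_sign(payload, key)}"


def verify_token(token: str, required_scope: str, expected_env: str,
                 key: bytes = SIGNING_KEY, now: Optional[float] = None) -> Optional[dict]:
    """Return the claims if authentic, unexpired, in-environment, and in-scope; else None."""
    now = time.time() if now is None else now
    payload, sep, sig = token.partition(".")
    # Constant-time comparison of the signature (as bytes, to accept any input safely).
    if not sep or not hmac.compare_digest(sig.encode("utf-8"),
                                          _sign(payload, key).encode("utf-8")):
        return None
    claims = json.loads(_b64d(payload))
    if claims["exp"] <= now:
        return None  # expired
    if claims["env"] != expected_env:
        return None  # e.g. a staging token presented to prod
    if required_scope not in claims["scopes"]:
        return None  # scope was never granted to this agent
    return claims
```

The short default TTL (300 seconds here, an arbitrary choice) limits how long a leaked token stays useful, and binding `env` into the signed claims is what blocks accidental cross-environment use.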

Authorization and least privilege

Implement role-based access control (RBAC) or attribute-based access control (ABAC) at API gateways and at the agent runtime. Define permission policies centrally, and enforce them at every service boundary rather than only at the perimeter.

Provide fine-grained permissions for knowledge base access, retrieval scopes, and write capabilities (e.g., read-only vs. write-to-queue).

Avoid “all-access” service accounts. When an agent needs broader rights (e.g., to orchestrate), justify and log that elevation.
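The three authorization ideas above (role-based permissions, an attribute check, and logged elevation for broad roles) can be combined in one small policy function. This Python sketch is hypothetical: the role names, permission strings, and the `Request` shape are illustrative, and in a real deployment the policy table would live in version-controlled policy code rather than a module constant.

```python
import logging
from dataclasses import dataclass
from typing import Dict, Optional, Set

log = logging.getLogger("authz")

# Hypothetical role -> permission map; keep the real one under version control.
ROLE_PERMISSIONS: Dict[str, Set[str]] = {
    "retriever": {"kb:read"},
    "verifier": {"kb:read"},
    "executor": {"queue:write"},
    "orchestrator": {"kb:read", "queue:write", "agent:invoke"},
}

# Roles with broad rights must justify every use.
ELEVATED_ROLES = {"orchestrator"}


@dataclass(frozen=True)
class Request:
    agent_id: str
    role: str
    env: str                  # environment the calling agent runs in
    permission: str           # e.g. "kb:read"
    resource_env: str         # environment the target resource belongs to
    elevation_reason: Optional[str] = None


def is_allowed(req: Request) -> bool:
    # RBAC: the role must grant the requested permission.
    if req.permission not in ROLE_PERMISSIONS.get(req.role, set()):
        log.warning("deny %s: role %s lacks %s", req.agent_id, req.role, req.permission)
        return False
    # ABAC: an attribute check that forbids cross-environment access.
    if req.env != req.resource_env:
        log.warning("deny %s: %s agent touching %s resource",
                    req.agent_id, req.env, req.resource_env)
        return False
    # Elevation: broad roles must state a reason, and the reason is logged.
    if req.role in ELEVATED_ROLES:
        if not req.elevation_reason:
            log.warning("deny %s: elevated role used without justification", req.agent_id)
            return False
        log.info("elevated %s for %s: %s", req.agent_id, req.permission, req.elevation_reason)
    return True
```

Returning a plain boolean keeps the check easy to call from both a gateway plugin and an agent runtime, which is how the same policy can be enforced at more than one boundary.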

Encryption and data protection

Encrypt data in transit using TLS and in storage using strong encryption keys managed by a dedicated Key Management Service (KMS).

For highly sensitive content, consider field-level encryption so that agents only decrypt what they need to process.

Manage keys centrally with automated rotation and strict audit trails. Where possible, maintain cryptographic separation between agent identities and data-encryption keys.

Knowledge base access and provenance

Treat knowledge sources as first-class security assets. Employ access controls on vector stores, document repositories, and external APIs.

Add provenance metadata to every retrieval: which index or source was hit, what query vector or selector was used, and the timestamp. Store provenance alongside outputs so any answer can be traced back to its origins.
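A provenance record can be as simple as a frozen dataclass attached to each answer. In this hypothetical Python sketch, we store a SHA-256 digest of the query text instead of the raw query (an assumption; some teams will prefer to keep the query or the query vector itself), and the source URIs are made-up examples.

```python
import hashlib
import json
import time
from dataclasses import asdict, dataclass, field
from typing import List, Optional, Sequence


@dataclass(frozen=True)
class Provenance:
    agent_id: str        # which agent performed the retrieval
    source: str          # which index, store, or API was hit
    query_digest: str    # SHA-256 of the query text or selector
    doc_ids: tuple       # identifiers of the documents or chunks returned
    retrieved_at: float  # Unix timestamp


def record_retrieval(agent_id: str, source: str, query: str,
                     doc_ids: Sequence[str], now: Optional[float] = None) -> Provenance:
    return Provenance(
        agent_id=agent_id,
        source=source,
        query_digest=hashlib.sha256(query.encode("utf-8")).hexdigest(),
        doc_ids=tuple(doc_ids),
        retrieved_at=time.time() if now is None else now,
    )


@dataclass
class TracedAnswer:
    """An agent output stored together with the retrievals it was built from."""
    answer: str
    provenance: List[Provenance] = field(default_factory=list)

    def to_json(self) -> str:
        return json.dumps(
            {"answer": self.answer, "provenance": [asdict(p) for p in self.provenance]},
            sort_keys=True,
        )
```

Serializing the answer and its provenance together means the trace cannot drift apart from the output it explains.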

Treat retrievers as untrusted inputs by default. Have verifier agents re-check facts or cross-validate results across multiple sources before any critical action is taken.
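One simple way a verifier agent can cross-validate is a quorum: accept a value only if enough independent sources agree on it and no competing value reaches the same threshold. The Python sketch below is illustrative; `cross_validate`, the whitespace and case normalization, and the default quorum of two are assumptions, and real facts often need domain-specific comparison.

```python
from collections import Counter
from typing import Callable, Dict, Optional

Lookup = Callable[[str], Optional[str]]


def _normalize(value: str) -> str:
    # Naive normalization: case-insensitive, collapsed whitespace.
    return " ".join(value.lower().split())


def cross_validate(claim_key: str, sources: Dict[str, Lookup],
                   min_agreement: int = 2) -> Optional[str]:
    """Return the value that at least `min_agreement` sources agree on, or None.

    None means "do not act": too few sources agreed, or two values tied.
    """
    answers = []
    for name, lookup in sources.items():
        try:
            value = lookup(claim_key)
        except Exception:
            continue  # an unreachable or failing source casts no vote
        if value is not None:
            answers.append(_normalize(value))
    counts = Counter(answers)
    agreed = [value for value, votes in counts.items() if votes >= min_agreement]
    # Exactly one value must clear the bar; conflicting majorities are treated as unverified.
    return agreed[0] if len(agreed) == 1 else None
```

A `None` result is the signal to stop and escalate rather than proceed with a critical action.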

Runtime isolation and sandboxing

Run agents in isolated execution environments or containers with strict resource limits. Sandbox code execution for agents that run arbitrary or user-contributed logic.

Limit agent capabilities at the OS and network layer — e.g., restrict outbound network access to required endpoints, block shell access, and use minimal base images to reduce vulnerabilities.
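Outbound restrictions belong primarily in firewall rules or network policies, but an application-level allowlist adds a second layer and produces clearer errors. This Python sketch is a hypothetical in-process check; the agent IDs and hostnames are invented, and it does not replace network-layer enforcement (it cannot, for example, stop DNS tricks or code that bypasses it).

```python
from typing import Dict, Set, Tuple
from urllib.parse import urlsplit

Endpoint = Tuple[str, str, int]  # (scheme, host, port)

# Hypothetical per-agent egress allowlist.
EGRESS_ALLOWLIST: Dict[str, Set[Endpoint]] = {
    "retriever-01": {("https", "kb.internal.example", 443)},
    "executor-02": {("https", "payments.internal.example", 443)},
}

DEFAULT_PORTS = {"https": 443, "http": 80}


def egress_allowed(agent_id: str, url: str) -> bool:
    """Allow a request only if (scheme, host, port) is on this agent's allowlist."""
    parts = urlsplit(url)
    scheme = parts.scheme.lower()
    host = (parts.hostname or "").lower()  # hostname ignores any "user@" prefix
    try:
        port = parts.port or DEFAULT_PORTS.get(scheme)
    except ValueError:
        return False  # malformed or out-of-range port
    return (scheme, host, port) in EGRESS_ALLOWLIST.get(agent_id, set())
```

Matching on exact host and port, rather than on string prefixes, avoids look-alike bypasses such as `kb.internal.example.evil.com`.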

Secrets management

Never bake secrets into agent code. Use a secrets manager to inject credentials at runtime with ephemeral leases.

Rotate secrets frequently and audit all retrievals. Deny access to secrets unless a just-in-time approval or policy check passes.
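The leasing pattern can be sketched in a few lines of Python. `SecretBroker` and `Lease` are hypothetical stand-ins for a real secrets manager; unlike managers that mint a distinct dynamic credential per lease, this sketch hands out the stored value with an expiry, runs a just-in-time policy callback before every grant, and writes each grant, denial, and rotation to an audit logger.

```python
import logging
import secrets
import time
from dataclasses import dataclass
from typing import Callable, Dict, Optional

audit = logging.getLogger("secrets.audit")


@dataclass(frozen=True)
class Lease:
    secret_name: str
    value: str
    expires_at: float

    def is_valid(self, now: Optional[float] = None) -> bool:
        return (time.time() if now is None else now) < self.expires_at


class SecretBroker:
    """Hypothetical in-memory stand-in for a secrets manager with leased access."""

    def __init__(self, store: Dict[str, str],
                 policy: Callable[[str, str], bool], ttl_s: float = 300) -> None:
        self._store = store
        self._policy = policy  # just-in-time check: (agent_id, secret_name) -> allowed?
        self._ttl_s = ttl_s

    def lease(self, agent_id: str, name: str,
              now: Optional[float] = None) -> Optional[Lease]:
        now = time.time() if now is None else now
        if name not in self._store or not self._policy(agent_id, name):
            audit.warning("DENY agent=%s secret=%s", agent_id, name)
            return None
        audit.info("GRANT agent=%s secret=%s ttl=%ss", agent_id, name, self._ttl_s)
        return Lease(name, self._store[name], now + self._ttl_s)

    def rotate(self, name: str) -> None:
        # Leases issued before rotation keep the old value until they expire.
        self._store[name] = secrets.token_urlsafe(32)
        audit.info("ROTATE secret=%s", name)
```

Agents would request a lease immediately before use and discard it afterwards, so nothing long-lived ever sits in agent code or configuration.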

Observability, detection, and response

Centralize logs, traces, and metrics for inter-agent calls. Correlate events across agents to quickly spot anomalous patterns or lateral movement.

Implement alerts for suspicious behaviors: unusual retrieval volumes, repeated access denials, or unexpected agent interactions.
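Many of these alerts reduce to "too many events of one kind from one agent in a short time." The Python sketch below is a hypothetical per-agent sliding-window counter; one instance could watch access denials and another retrieval calls, each with thresholds you would tune against your own baseline traffic.

```python
from collections import defaultdict, deque
from typing import Deque, Dict


class SlidingWindowAlert:
    """Flag an agent once it produces `threshold` events within `window_s` seconds.

    Use one instance per event type, e.g. access denials or retrieval calls.
    Assumes timestamps for a given agent arrive in non-decreasing order.
    """

    def __init__(self, threshold: int, window_s: float) -> None:
        self.threshold = threshold
        self.window_s = window_s
        self._events: Dict[str, Deque[float]] = defaultdict(deque)

    def record(self, agent_id: str, ts: float) -> bool:
        """Record one event; return True if the agent is now at or over the threshold."""
        window = self._events[agent_id]
        window.append(ts)
        # Drop events that have aged out of the window.
        while window and window[0] <= ts - self.window_s:
            window.popleft()
        return len(window) >= self.threshold
```

A `True` result is a natural trigger for the first step of an incident playbook, such as suspending or revoking that agent's credentials pending review.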

Maintain playbooks for incident response that include steps to isolate or revoke agent credentials, reproduce the attack path, and notify stakeholders.

Operational practices that make security realistic

Security is only effective when it’s operationally sustainable. These practices help teams maintain protections without slowing innovation.

- Secure-by-default templates: Provide vetted agent templates that include API auth, RBAC, logging, and secrets handling baked in so developers start from a safe posture.

- Automated CI/CD checks: Integrate security gates into agent deployments — static analysis, dependency scanning, and runtime policy checks.

- Policy-as-code: Express authorization, data handling, and access policies in code so they are versioned, reviewed, and testable.

- Red teaming and chaos testing: Regularly test agent networks for failure modes and adversarial inputs to validate detection and containment capabilities.

- Transparent audit trails: Keep immutable logs (WORM or append-only) of agent actions that satisfy auditors and regulators, while supporting operational debugging.

- Rate limiting and quotas: Apply per-agent and per-team quotas to prevent accidental overloads and runaway costs from looping or compromised agents.
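Per-agent quotas are commonly implemented as token buckets: each agent gets a burst capacity that refills at a steady rate. This Python sketch is illustrative; `QuotaManager`, the agent ID, and the limits are hypothetical, and a multi-instance deployment would keep bucket state in a shared store rather than in process memory.

```python
import time
from typing import Dict, Optional, Tuple


class TokenBucket:
    def __init__(self, capacity: float, refill_per_s: float, now: float) -> None:
        self.capacity = capacity
        self.refill_per_s = refill_per_s
        self.tokens = capacity  # start full so agents can burst up to capacity
        self.updated_at = now

    def allow(self, now: float, cost: float = 1.0) -> bool:
        # Refill in proportion to elapsed time, never beyond capacity.
        elapsed = max(0.0, now - self.updated_at)
        self.tokens = min(self.capacity, self.tokens + elapsed * self.refill_per_s)
        self.updated_at = now
        if self.tokens >= cost:
            self.tokens -= cost
            return True
        return False


class QuotaManager:
    """One bucket per agent; agents without a configured quota are denied."""

    def __init__(self, limits: Dict[str, Tuple[float, float]]) -> None:
        self._limits = limits  # agent_id -> (capacity, refill_per_s)
        self._buckets: Dict[str, TokenBucket] = {}

    def allow(self, agent_id: str, now: Optional[float] = None) -> bool:
        now = time.monotonic() if now is None else now
        if agent_id not in self._limits:
            return False
        bucket = self._buckets.get(agent_id)
        if bucket is None:
            capacity, rate = self._limits[agent_id]
            bucket = self._buckets[agent_id] = TokenBucket(capacity, rate, now)
        return bucket.allow(now)
```

Denying agents that have no configured quota keeps the limiter consistent with the least-privilege default described earlier.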

Human oversight and governance

Security in multi-agent systems is as much social as it is technical. Good governance aligns incentives, clarifies responsibility, and ensures ethical use.

Define ownership for each agent: who maintains it, what data it can access, and how it should behave under failure.

Establish escalation paths and approval workflows for agents that perform high-impact actions (financial transfers, legal filings, PHI access).
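An approval workflow can be enforced in code by parking high-impact requests until a named human signs off. The Python sketch below is hypothetical: the action types, the `ApprovalGate` class, and the approver identities are illustrative, and `_execute` is a placeholder where the real downstream call would go.

```python
import uuid
from dataclasses import dataclass, field
from typing import Dict, List, Set, Tuple

# Hypothetical action types that always require a human sign-off.
HIGH_IMPACT_ACTIONS = {"financial_transfer", "legal_filing", "phi_access"}


@dataclass
class ActionRequest:
    agent_id: str
    action_type: str
    details: str
    request_id: str = field(default_factory=lambda: uuid.uuid4().hex)


class ApprovalGate:
    def __init__(self, human_approvers: Set[str]) -> None:
        self.human_approvers = human_approvers
        self.pending: Dict[str, ActionRequest] = {}
        self.decisions: List[Tuple[str, str, str]] = []  # (request_id, approver, decision)

    def submit(self, req: ActionRequest) -> str:
        if req.action_type not in HIGH_IMPACT_ACTIONS:
            return self._execute(req)
        # High-impact: hold the request until a human approver decides.
        self.pending[req.request_id] = req
        return "pending_approval"

    def approve(self, request_id: str, approver: str) -> str:
        self._check_approver(approver)
        req = self.pending.pop(request_id)  # KeyError if unknown or already decided
        self.decisions.append((request_id, approver, "approved"))
        return self._execute(req)

    def reject(self, request_id: str, approver: str) -> str:
        self._check_approver(approver)
        self.pending.pop(request_id)
        self.decisions.append((request_id, approver, "rejected"))
        return "rejected"

    def _check_approver(self, approver: str) -> None:
        # Agents are never in the approver set, so they cannot approve their own actions.
        if approver not in self.human_approvers:
            raise PermissionError(f"{approver} may not approve high-impact actions")

    def _execute(self, req: ActionRequest) -> str:
        # Placeholder: call the real downstream API here.
        return "executed"
```

Because approval is a separate call that only listed humans can make, the requesting agent has no way to set an "approved" flag on its own request.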

Create a review cadence where security, compliance, and domain teams validate agent behaviors and knowledge base changes.

Maintain clear documentation of agent capabilities, expected inputs/outputs, and risk assumptions so that non-technical stakeholders can also assess the system’s trustworthiness.

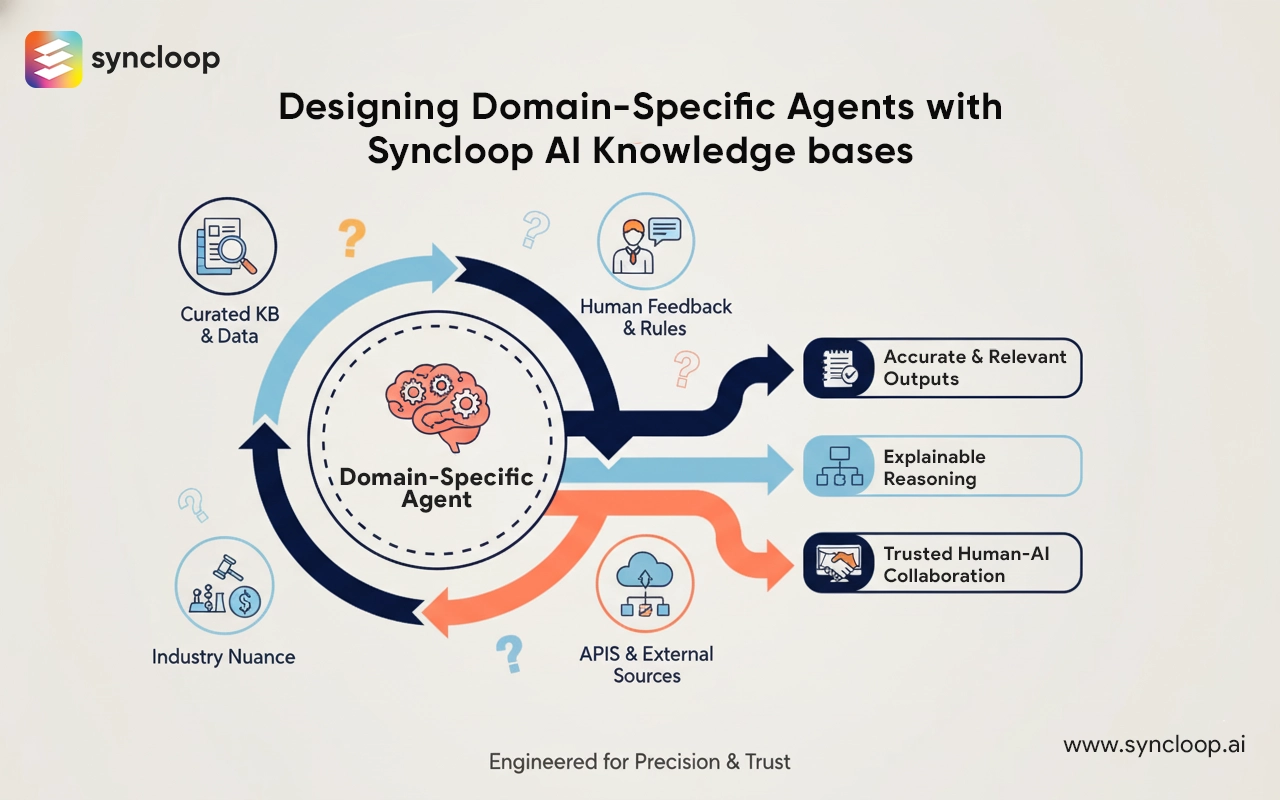

A human-centered approach to secure automation

Technology is only useful if people trust it. Present security as an enabler of confidence, not a roadblock. Use clear visualizations and provenance reports so end users can see why an agent made a decision. Provide easy override mechanisms when human judgment is required. When people understand how the system protects their data and why agents act the way they do, adoption accelerates.

Conclusion

Securing inter-agent communication in Syncloop AI environments is both a technical discipline and a cultural practice. By applying zero-trust principles, enforcing least privilege, protecting knowledge sources, and baking security into developer workflows, organizations can scale intelligent automation without surrendering control. Syncloop AI’s API-first, modular design lends itself to these security practices: authentication, fine-grained authorization, provenance, and observability are not add-ons — they are essential features that make automation trustworthy.

The future of work depends on systems that act autonomously yet transparently, that accelerate human decisions rather than obscure them. When security and design move together — when agents operate within well-defined boundaries and their reasoning is auditable — Syncloop AI becomes more than an automation platform: it becomes a partner that organizations can rely on to act responsibly at scale.
