Visual-First AI Development: Why Seeing Your Agents Work Changes Everything

Posted by: Andrew Smith | January 21, 2026

The shift from code-centric to visual-first development isn't about making things easier for non-coders. It's about giving everyone, including expert developers, better tools to understand, debug, and collaborate on complex AI systems.

There's a scene that plays out in AI teams everywhere: the engineer staring at log files, trying to understand why an agent made a particular decision. Scrolling through JSON payloads. Grepping for timestamps. Mentally reconstructing the sequence of events that led to an unexpected outcome.

This is the standard debugging experience for multi-agent AI systems, and it's fundamentally broken.

Not because log analysis is bad—it's essential—but because when you're trying to understand complex interactions between multiple agents, humans, and systems, text-based debugging creates a cognitive overhead that slows everything down.

The Log File Bottleneck

Consider what happens when a multi-agent system produces an unexpected result. In a typical code-first environment, you start by gathering logs from each component. Then you correlate timestamps to reconstruct the sequence of events. You trace data transformations through each stage. You identify where the logic diverged from expectations.
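To make the correlation step concrete, here is a minimal sketch of merging per-agent logs into one timeline. It assumes each agent writes JSON lines that are already time-ordered and carry `ts`, `agent`, and `msg` fields; those field names and the two sample agents are hypothetical, not taken from any particular platform.

```python
import heapq
import json
from datetime import datetime


def parse_line(line):
    """Parse one JSON log line into a (timestamp, agent, message) tuple."""
    record = json.loads(line)
    return (datetime.fromisoformat(record["ts"]), record["agent"], record["msg"])


def merge_logs(*streams):
    """Merge per-agent log streams (each already time-ordered) into one timeline."""
    parsed = [[parse_line(line) for line in stream] for stream in streams]
    return list(heapq.merge(*parsed))


# Hypothetical sample logs from two agents.
planner_log = [
    '{"ts": "2026-01-21T10:00:00", "agent": "planner", "msg": "received task"}',
    '{"ts": "2026-01-21T10:00:05", "agent": "planner", "msg": "received findings"}',
]
researcher_log = [
    '{"ts": "2026-01-21T10:00:02", "agent": "researcher", "msg": "started search"}',
    '{"ts": "2026-01-21T10:00:04", "agent": "researcher", "msg": "returned 3 results"}',
]

for ts, agent, msg in merge_logs(planner_log, researcher_log):
    print(ts.isoformat(), agent, msg)
```

Even in this toy case the engineer has to know the log schema and where each file lives, which is exactly the knowledge that keeps other stakeholders out of the investigation.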

This process can take hours for complex issues. More importantly, it can only be performed by engineers with deep system knowledge. The product manager who needs to understand why an AI feature is behaving unexpectedly can't participate meaningfully in this investigation. The compliance officer who needs to verify decision logic depends on someone else to translate logs into human-readable explanations.

The log file bottleneck isn't just a debugging inconvenience. It's an organizational constraint that limits who can meaningfully participate in AI development and operations.

What Visual-First Actually Means

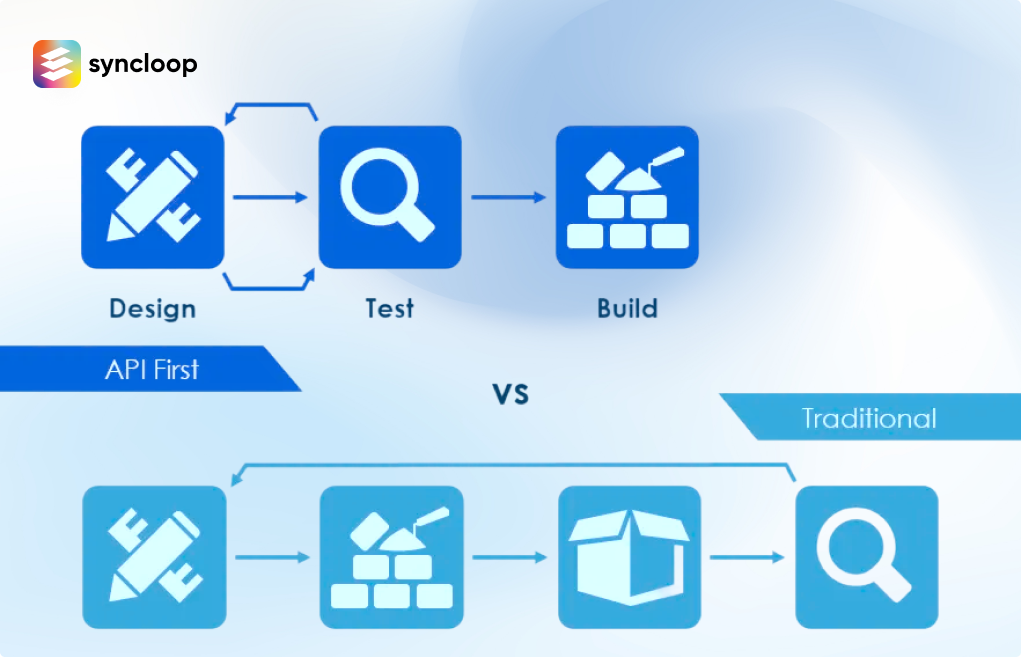

Visual-first development is often misunderstood as drag-and-drop coding for non-technical users. That's a narrow interpretation that misses the real value.

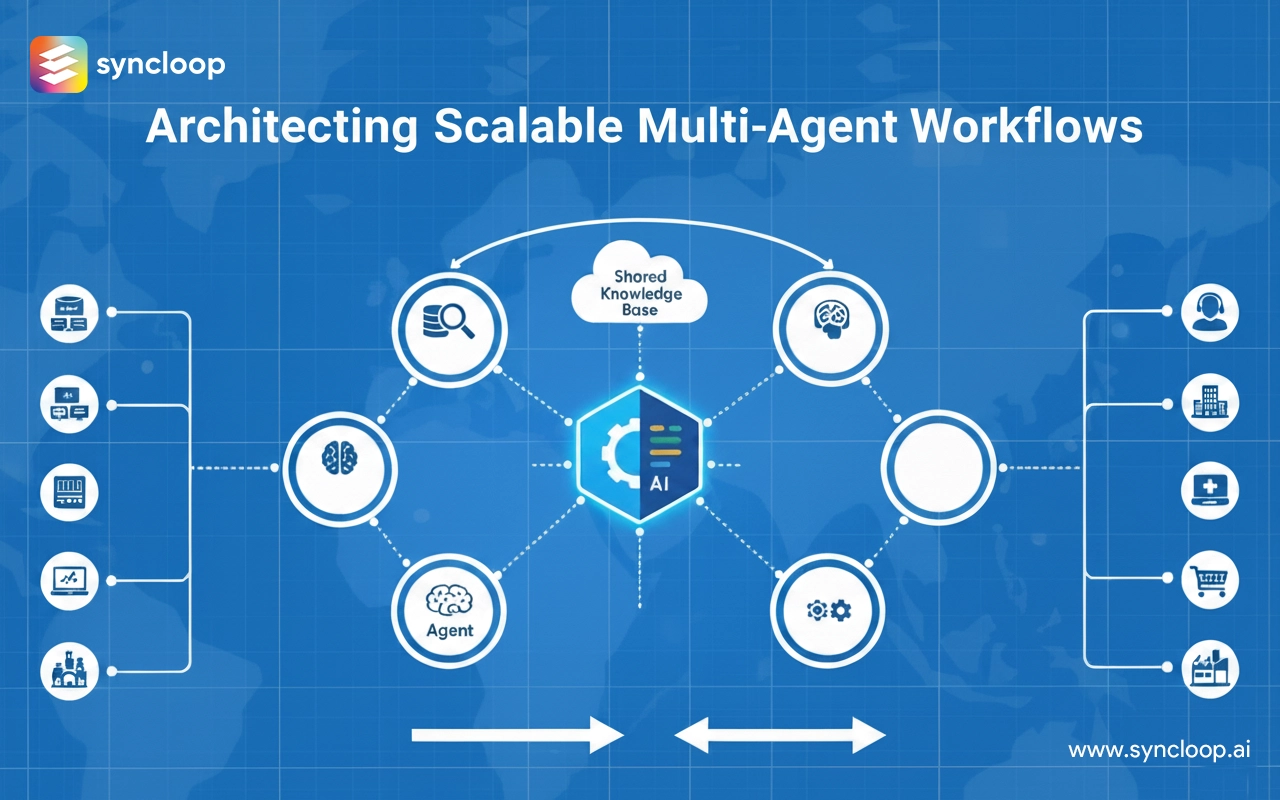

True visual-first development means building AI systems where the state, flow, and behavior are inherently visible. Where you can watch agents collaborate in real-time. Where decision paths are rendered graphically rather than reconstructed from traces. Where the current state of the system is always observable, not inferred from scattered data points.

Think of it like the difference between reading a screenplay and watching the movie. Both convey the same story, but the visual format makes patterns, pacing, and interactions immediately apparent in ways that text simply cannot.

For AI systems specifically, visual-first development provides real-time visualization of agent-to-agent communication, clear representation of decision branches and their outcomes, immediate feedback on how changes affect system behavior, and shared understanding across technical and non-technical team members.
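As a rough illustration of what rendering agent-to-agent communication can look like at its simplest, the sketch below turns a list of messages into Graphviz DOT text, which any DOT renderer can draw as a graph. The message tuples and agent names are hypothetical, and the function assumes labels contain no double quotes; a real platform would draw this live rather than export it.

```python
def to_dot(messages):
    """Render (sender, receiver, label) messages as a Graphviz DOT digraph."""
    lines = ["digraph workflow {"]
    for sender, receiver, label in messages:
        # One edge per message; assumes names and labels contain no double quotes.
        lines.append(f'  "{sender}" -> "{receiver}" [label="{label}"];')
    lines.append("}")
    return "\n".join(lines)


# Hypothetical messages exchanged during one workflow run.
messages = [
    ("planner", "researcher", "find sources"),
    ("researcher", "planner", "3 results"),
    ("planner", "writer", "draft summary"),
]

print(to_dot(messages))
```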

The Collaboration Dividend

One of the most underappreciated benefits of visual development is what it does to team dynamics. In code-first environments, AI development often becomes siloed: engineers build and maintain the system while everyone else works from documentation and second-hand explanations.

Visual environments break this pattern. When a product manager can watch an agent workflow execute and ask questions about specific decision points, collaboration becomes real-time rather than asynchronous. When a compliance officer can see the exact logic path that led to a particular decision, audits become conversations rather than investigations.

This collaboration dividend compounds over time. Teams that can reason about AI systems together make better decisions faster. They catch issues earlier. They iterate more rapidly because feedback loops don't require translation through documentation.

Debugging Multi-Agent Systems Visually

Let's get specific about how visual debugging changes the game for multi-agent AI.

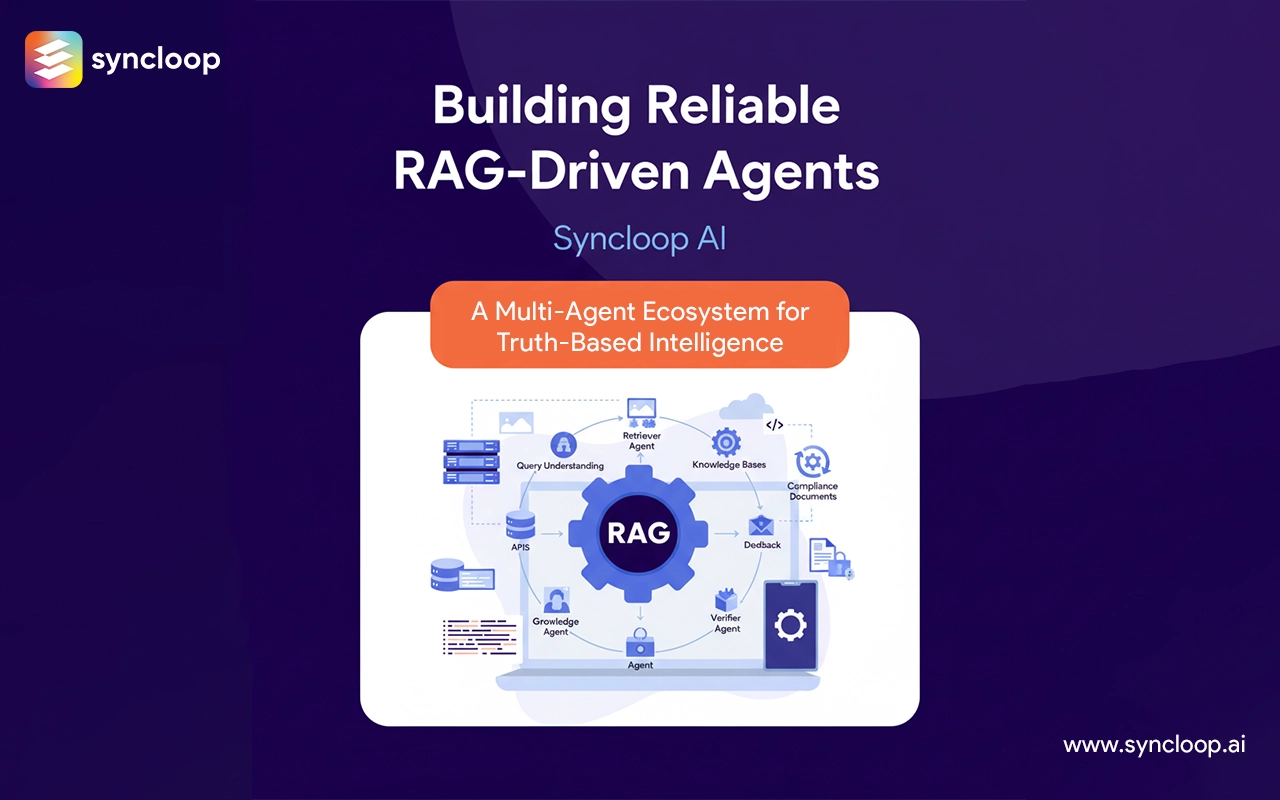

In a traditional debugging scenario, an agent produces an unexpected output. You start by identifying which agent produced the output, then trace back through the chain of agents that contributed to that decision. For each agent, you examine inputs, processing logic, and outputs. You correlate timing to understand the sequence. You look for where the actual behavior diverged from expected behavior.
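The trace-back step can be sketched as follows, assuming every logged event records the `id` of the event that triggered it in a `parent` field. That schema, and the sample agents, are assumptions made for illustration; many systems do not record causality this cleanly, which is part of why the manual version takes so long.

```python
def trace_back(events, event_id):
    """Return the chain of events from the root cause down to event_id."""
    by_id = {event["id"]: event for event in events}
    chain = []
    seen = set()  # guards against accidental cycles in parent links
    current = by_id.get(event_id)
    while current is not None and current["id"] not in seen:
        seen.add(current["id"])
        chain.append(current)
        current = by_id.get(current["parent"])
    return list(reversed(chain))


# Hypothetical events from a three-agent support workflow.
events = [
    {"id": "e1", "agent": "intake", "parent": None, "output": "classified as billing"},
    {"id": "e2", "agent": "retriever", "parent": "e1", "output": "fetched 2 invoices"},
    {"id": "e3", "agent": "responder", "parent": "e2", "output": "refund approved"},
]

for event in trace_back(events, "e3"):
    print(event["agent"], "->", event["output"])
```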

In a visual-first environment, you watch the workflow execute. You see data flow between agents in real-time. When something unexpected happens, you can pause, inspect state, and step through execution. The visual representation makes patterns visible that would be invisible in log analysis: bottlenecks, loops, unexpected branches, context loss at boundaries.
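Under the hood, pause, inspect, and step need an executor that hands control back after every agent runs. A minimal sketch of that idea uses a Python generator; the `classify` and `route` agents and the shape of the state dictionary are hypothetical stand-ins.

```python
def run_workflow(steps, state):
    """Yield (step_name, state_snapshot) after each step so the caller sets the pace."""
    for name, agent in steps:
        state = agent(state)
        yield name, dict(state)  # copy, so later steps cannot alter the snapshot


# Hypothetical agents: each takes the current state and returns a new one.
def classify(state):
    category = "billing" if "invoice" in state["text"] else "general"
    return {**state, "category": category}


def route(state):
    return {**state, "queue": f"{state['category']}-team"}


steps = [("classify", classify), ("route", route)]
execution = run_workflow(steps, {"text": "question about my invoice"})

# Step once, inspect the state, then decide whether to continue.
name, snapshot = next(execution)
print(name, snapshot)
```

Because nothing runs until the caller asks for the next step, a visual front end can sit on top of the same loop and redraw the graph from each snapshot.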

More importantly, visual debugging is shareable. You can walk a colleague through an issue by showing them what's happening rather than describing it. You can record executions for later analysis. You can compare visual traces across different runs to understand what changed.
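Comparing runs reduces to finding the first step where two recorded traces disagree. The sketch below assumes each trace is an ordered list of `(agent, output)` pairs, a hypothetical recording format chosen for brevity.

```python
from itertools import zip_longest


def first_divergence(run_a, run_b):
    """Return (index, step_a, step_b) for the first differing step, or None if identical."""
    for index, (step_a, step_b) in enumerate(zip_longest(run_a, run_b)):
        if step_a != step_b:
            return index, step_a, step_b
    return None


# Hypothetical recorded traces from two runs of the same workflow.
run_a = [("intake", "billing"), ("retriever", "2 invoices"), ("responder", "refund approved")]
run_b = [("intake", "billing"), ("retriever", "0 invoices"), ("responder", "refund denied")]

print(first_divergence(run_a, run_b))
```

Here the runs diverge at the retriever, not at the responder whose output looked wrong, which is the kind of upstream cause a side-by-side visual trace makes obvious.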

The Expert Developer Use Case

A common objection to visual development is that expert engineers don't need it—they're comfortable with code and log analysis. This objection misunderstands the value proposition.

Visual-first development isn't about replacing code skills. It's about providing additional leverage for experts. The same engineer who can read logs and trace issues manually can work faster and more effectively when the system renders behavior visually. They can still drop into code when needed—in fact, the best visual environments provide seamless transitions between visual and code-level interaction.

Consider how expert developers work in other domains. Database administrators use visual query plans even though they can read execution plans in text. Network engineers use visual topology diagrams even though they can parse routing tables. The visual representation accelerates understanding without removing access to underlying detail.

For AI systems, where agent interactions create emergent complexity, visual representation is even more valuable. The combinatorial explosion of possible agent interactions makes text-based analysis increasingly impractical as systems grow.

Building for Visibility from Day One

If you're evaluating platforms for multi-agent AI development, treat visibility as a first-class requirement rather than a nice-to-have feature.

Ask specific questions: Can I watch agent workflows execute in real-time? Can non-technical stakeholders understand what's happening without translation? Can I pause, inspect, and step through execution? Is the visual representation integrated with the development environment or bolted on as an afterthought?

The answers reveal whether visibility is architecturally baked in or superficially added. True visual-first platforms are designed from the ground up with observation as a core capability. Retrofitted visual layers tend to be incomplete and disconnected from the underlying system state.

The shift to visual-first AI development mirrors similar transitions in other domains: from text-based CAD to graphical design tools, from command-line version control to visual Git clients, from hand-drawn circuits to electronic design automation.

In each case, the transition wasn't about making things easier for beginners. It was about giving experts better tools to handle increasing complexity. As multi-agent AI systems grow more sophisticated, visual development isn't optional—it's how capable teams will stay productive in the face of complexity.
