Multi-Agent Systems Explained: When AI Agents Work Better as Teams

Posted by: Nickolas John | January 28, 2026

A single AI model can answer questions. A team of specialized agents can run your operations. This post covers when and why to move from single-agent to multi-agent architectures.

Ask ChatGPT to summarize a document, and it performs admirably. Ask it to analyze a regulatory filing, cross-reference against historical data, check for compliance violations, draft an alert, and route exceptions to the appropriate human reviewer—and you'll quickly hit limitations.

This isn't because the underlying model lacks intelligence. It's because complex, multi-step processes require coordination that single-model architectures weren't designed to provide.

Multi-agent systems address this gap by distributing work across specialized agents that collaborate—much like a high-functioning team distributes work across specialists who coordinate their efforts.

What Makes a System Multi-Agent

The term "agent" gets thrown around loosely in AI discussions. For clarity: an AI agent is an autonomous system that perceives its environment, makes decisions, and takes actions to achieve specific goals. It's more than a model endpoint—it has context, tools, and agency.

A multi-agent system is exactly what it sounds like: multiple agents working together toward shared objectives. But the key word is "together." Simply running multiple AI models in parallel isn't multi-agent; it's parallel processing. True multi-agent systems have:

- Shared Context: Agents have access to common state and can understand what other agents have done and decided.

- Coordinated Execution: There's a mechanism for sequencing work, handling dependencies, and managing handoffs between agents.

- Specialized Roles: Different agents handle different aspects of a task based on their capabilities and access.

- Collective Goal: The agents work toward a shared objective rather than optimizing independently.
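To make the four properties above concrete, here is a minimal sketch in Python. It assumes an "agent" can be modeled as a plain function, and every name in it (`SharedContext`, `extractor`, `checker`, `run_team`) is hypothetical, chosen for illustration rather than taken from any framework. The hard-coded revenue figure stands in for a real model or tool call.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class SharedContext:
    """Common state that every agent can read and write (hypothetical)."""
    goal: str                                          # the collective goal
    facts: dict[str, str] = field(default_factory=dict)
    history: list[str] = field(default_factory=list)   # who did what


# For this sketch, an agent is just a function over the shared context.
Agent = Callable[[SharedContext], None]


def extractor(ctx: SharedContext) -> None:
    # Specialized role: record a figure (hard-coded stand-in for a model call).
    ctx.facts["revenue"] = "120"
    ctx.history.append("extractor: recorded revenue")


def checker(ctx: SharedContext) -> None:
    # Reads what the extractor decided from shared context, not from a prompt.
    revenue = int(ctx.facts["revenue"])
    ctx.facts["flag"] = "review" if revenue > 100 else "ok"
    ctx.history.append("checker: set flag from revenue")


def run_team(ctx: SharedContext, agents: list[Agent]) -> SharedContext:
    # Coordinated execution: a fixed order that respects the dependency.
    for agent in agents:
        agent(ctx)
    return ctx


result = run_team(SharedContext(goal="screen filing"), [extractor, checker])
print(result.facts["flag"])  # review
print(result.history)
```

Running `extractor` and `checker` as two unrelated calls would be parallel processing at best. What makes this a (very small) multi-agent system is that the second agent acts on state the first one left behind.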

When Single Agents Hit Their Ceiling

Single-agent architectures work well for focused, bounded tasks: answering questions, generating content, analyzing data within context window limits. They become constrained when:

- Context Exceeds Capacity: Even large context windows have limits. Complex processes involving multiple data sources, long histories, and extensive tool use can exceed what a single agent can hold in working memory.

- Specialization Creates Tradeoffs: An agent optimized for financial analysis may not be optimal for customer communication. A single-agent approach forces compromises; multi-agent allows specialists.

- Parallel Processing Matters: Some workflows benefit from simultaneous execution. A single agent processes sequentially; multiple agents can work in parallel and converge results.

- Human-AI Collaboration Requires Handoffs: Real-world processes often need AI handling routine work while humans manage exceptions. This requires coordination logic that single-agent setups struggle to provide cleanly.
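The parallel-processing point above can be sketched with Python's standard `concurrent.futures` module. The two agent functions and the values they return are hypothetical stand-ins for real model or database calls; only the fan-out and converge structure is the point.

```python
import time
from concurrent.futures import ThreadPoolExecutor


def historical_lookup(filing_id: str) -> dict:
    time.sleep(0.05)  # stand-in for a slow model or database call
    return {"agent": "historical_lookup", "filing": filing_id, "prior_filings": 3}


def compliance_check(filing_id: str) -> dict:
    time.sleep(0.05)  # stand-in for a second, independent call
    return {"agent": "compliance_check", "filing": filing_id, "violations": 0}


def analyze(filing_id: str) -> dict:
    # Fan out: both independent subtasks are submitted before either finishes.
    with ThreadPoolExecutor(max_workers=2) as pool:
        futures = [pool.submit(fn, filing_id)
                   for fn in (historical_lookup, compliance_check)]
        # Converge: block until every subtask has returned.
        partials = [f.result() for f in futures]
    return {p["agent"]: p for p in partials}


merged = analyze("F-001")
print(sorted(merged))  # ['compliance_check', 'historical_lookup']
```

This only helps when the subtasks really are independent. If one needs the other's output, the work is sequential no matter how many agents are involved.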

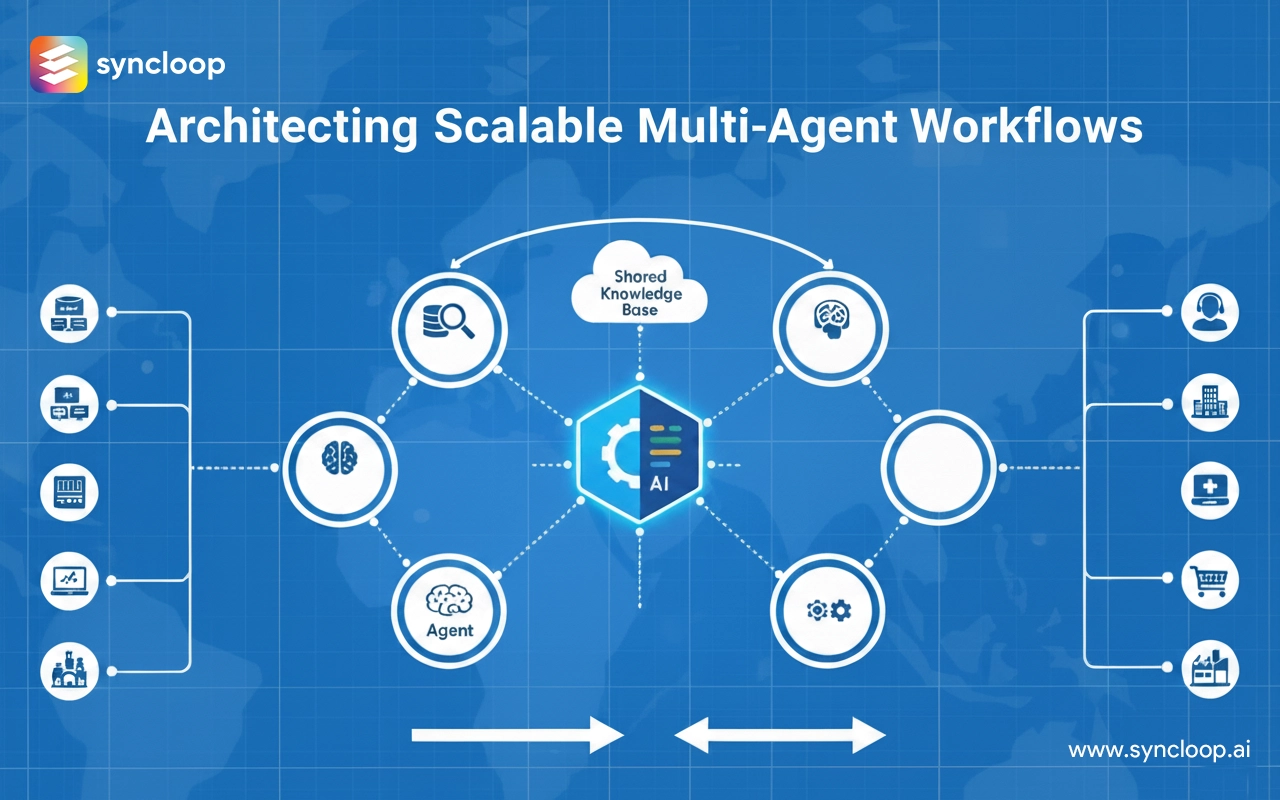

The Anatomy of Multi-Agent Coordination

Effective multi-agent systems share common architectural elements that enable coordination:

- Orchestration Layer: Something needs to manage agent sequencing, dependencies, and state. This can be a central coordinator agent, distributed consensus, or workflow-based routing.

- Shared Memory: Agents need access to common state without passing full context on every interaction. This might be vector databases for semantic memory, traditional databases for structured data, or in-memory state for real-time coordination.

- Communication Protocols: How do agents pass information, request help, and signal completion? Well-designed multi-agent systems have explicit protocols rather than ad-hoc message passing.

- Error Handling: When one agent fails or produces unexpected output, what happens? Robust systems have retry logic, fallback paths, and graceful degradation.
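As one possible illustration of the last two elements above, the sketch below pairs an explicit message type with retry and fallback logic. The `Message` fields, the three-attempt limit, and both summarizer functions are assumptions made for this example, not a standard protocol.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class Message:
    """Explicit envelope for agent-to-agent traffic (hypothetical schema)."""
    sender: str
    recipient: str
    kind: str      # "request" or "result" in this sketch
    payload: str


Handler = Callable[[Message], Message]


def call_with_fallback(primary: Handler, fallback: Handler,
                       request: Message, max_attempts: int = 3) -> Message:
    # Retry logic: give the primary agent a bounded number of attempts.
    for _ in range(max_attempts):
        try:
            return primary(request)
        except RuntimeError:
            continue
    # Fallback path: degrade gracefully instead of failing the whole workflow.
    return fallback(request)


attempts = {"count": 0}


def flaky_summarizer(msg: Message) -> Message:
    # Fails twice, then succeeds, to exercise the retry loop.
    attempts["count"] += 1
    if attempts["count"] < 3:
        raise RuntimeError("transient failure")
    return Message("summarizer", msg.sender, "result", f"summary of {msg.payload}")


def always_down(msg: Message) -> Message:
    raise RuntimeError("unavailable")


def rule_based_summarizer(msg: Message) -> Message:
    # Cruder but dependable: return the first 20 characters.
    return Message("rules", msg.sender, "result", msg.payload[:20])


request = Message("supervisor", "summarizer", "request", "filing text")
reply = call_with_fallback(flaky_summarizer, rule_based_summarizer, request)
degraded = call_with_fallback(always_down, rule_based_summarizer, request)
print(reply.sender, "|", reply.payload)        # summarizer | summary of filing text
print(degraded.sender, "|", degraded.payload)  # rules | filing text
```

Because every reply names its sender, the caller can tell a full result from a degraded one and decide whether that is good enough.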

Common Multi-Agent Patterns

Several architectural patterns have emerged as effective approaches to multi-agent design:

- Supervisor Pattern: A central agent receives tasks, delegates to specialist agents, aggregates results, and handles exceptions. Clear hierarchy, straightforward debugging, but potential bottleneck at the supervisor.

- Pipeline Pattern: Agents arranged in sequence, each processing and passing to the next. Well-suited for linear workflows with clear transformation stages. Less flexible for branching logic.

- Collaborative Pattern: Agents work together as peers, negotiating work distribution and coordinating directly. More complex to implement but handles ambiguous situations better than hierarchies.

- Hybrid Pattern: Combines elements of the above. Common approach: supervisor handles routing and escalation while specialist agents operate semi-autonomously within their domains.
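Of the patterns above, the supervisor pattern is the simplest to sketch. The version below assumes tasks arrive already labeled with a kind; the specialist functions and the `SPECIALISTS` registry are hypothetical, and a real supervisor would more likely use a model to classify each task.

```python
from typing import Callable


# Hypothetical specialists; each would wrap its own model, prompt, and tools.
def financial_analyst(task: str) -> str:
    return f"[finance] analyzed: {task}"


def customer_writer(task: str) -> str:
    return f"[comms] drafted: {task}"


SPECIALISTS: dict[str, Callable[[str], str]] = {
    "finance": financial_analyst,
    "comms": customer_writer,
}


def supervisor(tasks: list[tuple[str, str]]) -> dict[str, list[str]]:
    """Delegate each (kind, task) pair, aggregate results, collect exceptions."""
    report: dict[str, list[str]] = {"results": [], "escalated": []}
    for kind, task in tasks:
        specialist = SPECIALISTS.get(kind)
        if specialist is None:
            # No specialist for this kind: treat it as an exception to escalate.
            report["escalated"].append(task)
        else:
            report["results"].append(specialist(task))
    return report


report = supervisor([
    ("finance", "Q3 variance"),
    ("comms", "delay notice"),
    ("legal", "contract dispute"),
])
print(report["results"])
print(report["escalated"])  # ['contract dispute']
```

Every task passes through the single `supervisor` loop, which is what makes this pattern easy to debug and also where the bottleneck shows up.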

The Human Element

The most effective multi-agent systems don't try to eliminate human involvement—they optimize for it. Human workers become another type of agent in the system, handling tasks that require judgment, creativity, empathy, or accountability.

Good human-AI coordination in multi-agent systems involves:

- Intelligent Routing: Work goes to humans only when AI can't handle it reliably.

- Context Preservation: Humans receive full background when they're brought in.

- Escalation Paths: Humans can easily override or redirect AI decisions.

- Audit Trails: Records show exactly what AI did and why, supporting human oversight.
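A rough sketch of how routing, context preservation, escalation, and audit trails might fit together is shown below. It assumes each agent reports a confidence score between 0 and 1, which is itself a design choice. The 0.8 threshold, the `Decision` and `Router` classes, and the sample invoices are all hypothetical.

```python
from dataclasses import dataclass, field

CONFIDENCE_THRESHOLD = 0.8  # assumed cutoff; tune per use case


@dataclass
class Decision:
    item: str
    action: str
    confidence: float  # assumed to be reported by the agent, 0.0 to 1.0
    rationale: str


@dataclass
class Router:
    human_queue: list[Decision] = field(default_factory=list)
    audit_log: list[dict] = field(default_factory=list)

    def route(self, decision: Decision) -> str:
        # Routing: humans only see decisions below the confidence cutoff.
        handler = "ai" if decision.confidence >= CONFIDENCE_THRESHOLD else "human"
        if handler == "human":
            # Context preservation: queue the full decision, rationale included.
            self.human_queue.append(decision)
        self._record(decision, handler)
        return handler

    def override(self, decision: Decision, new_action: str, reviewer: str) -> None:
        # Escalation path: a reviewer replaces the AI's proposed action.
        decision.action = new_action
        self._record(decision, reviewer)

    def _record(self, decision: Decision, handler: str) -> None:
        # Audit trail: what was decided, by whom, and why.
        self.audit_log.append({
            "item": decision.item,
            "action": decision.action,
            "handler": handler,
            "rationale": decision.rationale,
        })


router = Router()
routine = Decision("invoice-17", "approve", 0.95, "matches purchase order")
unclear = Decision("invoice-18", "reject", 0.55, "vendor name mismatch")

print(router.route(routine))  # ai
print(router.route(unclear))  # human
router.override(unclear, "approve", reviewer="j.doe")
print(len(router.audit_log))  # 3
```

The audit log records the AI's original proposal and the human override as separate entries, so a later reviewer can see both what the AI suggested and what was actually done.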

This isn't about AI replacing humans. It's about freeing humans from routine work so they can focus on what requires human judgment.

Evaluating Multi-Agent Readiness

Not every AI application needs multi-agent architecture. Here are signs that a use case is a good fit:

- Process complexity exceeds single-model capacity. If you're chaining prompts and losing context, multi-agent may help.

- Specialization would improve outcomes. If different parts of your process need different expertise, specialized agents will often outperform a single generalist.

- Parallel execution would improve speed. If independent subtasks could run simultaneously, multi-agent unlocks parallelism.

- Human involvement is part of the process. If humans need to review, approve, or handle exceptions, multi-agent provides natural integration points.

Multi-agent AI isn't about having more agents. It's about having the right coordination to let specialized capabilities work together effectively. Like a well-run team, the value comes not from individual brilliance but from how capabilities combine.

Organizations that master multi-agent architectures gain the ability to tackle complex processes that single-model approaches simply can't handle. They can blend AI and human judgment seamlessly. They can scale intelligence across their operations without scaling complexity proportionally.

That's not incremental improvement. That's a new capability.
